Abstract

TL;DR: Plug-and-play high-dimensional extension of 3DGS for dynamic scenes.

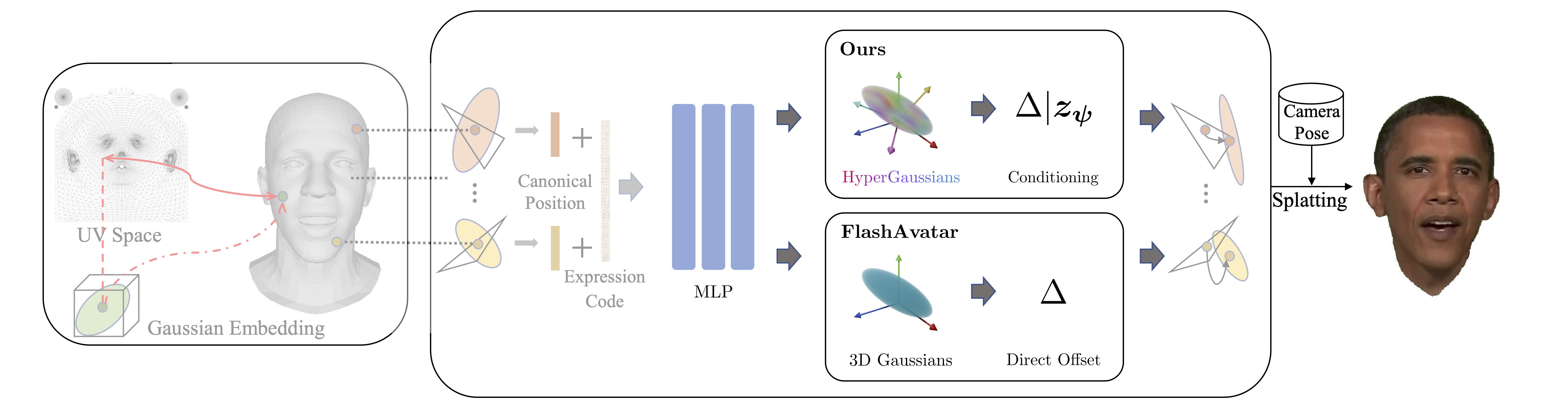

We introduce HyperGaussians, a novel extension of 3D Gaussian Splatting for high-quality animatable face avatars. Creating such detailed face avatars from videos is a challenging problem with numerous applications in augmented and virtual reality. While tremendous successes have been achieved for static faces, animatable avatars from monocular videos still fall into the uncanny valley. The de facto standard, 3D Gaussian Splatting (3DGS), represents a face through a collection of 3D Gaussian primitives. 3DGS excels at rendering static faces, but the state of the art still struggles with nonlinear deformations, complex lighting effects, and fine details. While most related works focus on predicting better Gaussian parameters from expression codes, we rethink the 3D Gaussian representation itself and how to make it more expressive. Our insights lead to a novel extension of 3D Gaussians to high-dimensional multivariate Gaussians, dubbed 'HyperGaussians'. The higher dimensionality increases expressivity through conditioning on a learnable local embedding. However, splatting HyperGaussians is computationally expensive because it requires inverting a high-dimensional covariance matrix. We solve this with a re-parameterization of the covariance matrix, dubbed the 'inverse covariance trick'. This trick boosts the efficiency so that HyperGaussians can be seamlessly integrated into existing models. To demonstrate this, we plug HyperGaussians into the state of the art in fast monocular face avatars: FlashAvatar. Our evaluation on 19 subjects from 4 face datasets shows that HyperGaussians outperform 3DGS numerically and visually, particularly for high-frequency details like eyeglass frames, teeth, complex facial movements, and specular reflections.
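To make the conditioning step and its cost concrete, here is a minimal NumPy sketch. The abstract does not spell out the re-parameterization, so the following is an assumption: it uses the standard Gaussian identity that, when a joint Gaussian is stored as a precision (inverse covariance) matrix, conditioning requires inverting only the small block for the kept dimensions rather than the large block for the conditioning dimensions. The names `condition_via_covariance`, `condition_via_precision`, and `demo`, the split into `m = 3` spatial dimensions and `n` embedding dimensions, and the random test data are all hypothetical and not taken from the authors' code.

```python
import numpy as np


def condition_via_covariance(mu, sigma, b, m=3):
    """Condition a joint Gaussian on its last n dims using the covariance.

    Cost is dominated by a solve against the (n x n) block sigma_bb.
    """
    mu_a, mu_b = mu[:m], mu[m:]
    s_aa, s_ab, s_bb = sigma[:m, :m], sigma[:m, m:], sigma[m:, m:]
    # gain = s_ab @ inv(s_bb), computed with a solve (s_bb is symmetric)
    gain = np.linalg.solve(s_bb, s_ab.T).T
    mean = mu_a + gain @ (b - mu_b)
    cov = s_aa - gain @ s_ab.T
    return mean, cov


def condition_via_precision(mu, lam, b, m=3):
    """Same conditional, computed from the precision matrix lam = inv(sigma).

    Only the small (m x m) block lam_aa has to be inverted.
    """
    mu_a, mu_b = mu[:m], mu[m:]
    l_aa, l_ab = lam[:m, :m], lam[:m, m:]
    cov = np.linalg.inv(l_aa)
    mean = mu_a - cov @ (l_ab @ (b - mu_b))
    return mean, cov


def demo(n=8, m=3, seed=0):
    """Build a random joint Gaussian and condition it both ways."""
    rng = np.random.default_rng(seed)
    a_mat = rng.normal(size=(m + n, m + n))
    # Symmetric positive-definite covariance (assumed test data)
    sigma = a_mat @ a_mat.T + (m + n) * np.eye(m + n)
    mu = rng.normal(size=m + n)
    b = rng.normal(size=n)  # stands in for the local embedding
    mean_c, cov_c = condition_via_covariance(mu, sigma, b, m)
    # Here the precision comes from inverting sigma only to compare results;
    # a model could instead store and learn the precision directly.
    mean_p, cov_p = condition_via_precision(mu, np.linalg.inv(sigma), b, m)
    return mean_c, cov_c, mean_p, cov_p
```

Both routes yield the same 3D conditional mean and covariance. The difference is which matrix is inverted at conditioning time: an `n x n` block in the covariance form versus an `m x m` block in the precision form, which matters when the embedding dimension `n` is much larger than 3.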
